I've been involved with a lot of implementations and upgrades over the years, and I'm certain that every one of them hit a snag at one point or another. Whether the cause was miscommunication, improper training, or a technical issue, each one needed the same first step toward a solution: an evaluation of where the problem lived.

Not just "what issue are we dealing with?" but "where does the actual problem live?"

Is it a bug?

One project I was involved with had major allocation issues in the storeroom. Items were being allocated to orders before they were even in stock. This appeared to be a major bug that would require escalation to the vendor before the client could go live, and that was the path the project manager was taking to get to a resolution.

I was one of several supply chain consultants, primarily brought in to help with pre-go-live training and go-live support. In other words, I was just there to help the team cross the finish line.

I don't recall whether I was asked or just overheard talk of 'the bug' that was causing frustration, but I decided to look at the system settings.

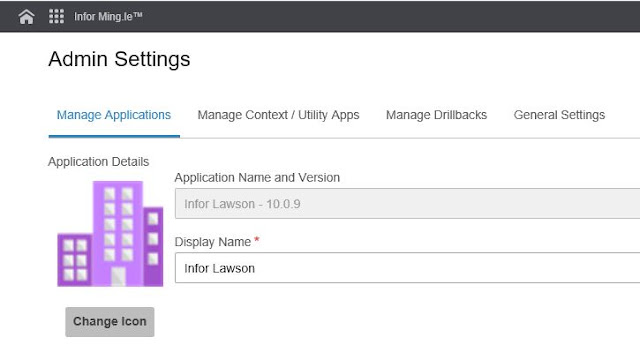

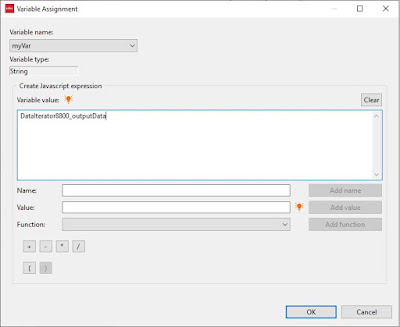

The flag that allowed allocation on open POs was correctly set to 'No', yet the system was allowing it. That made no sense, so I kept digging and eventually found another setting that seemed to contradict the no-allocation setting.

I don't remember the actual setting now, but I do remember that it seemed to allow allocations when an item was on order (not yet received), so I brought my discovery to the project leaders.

Expecting a high five for my doggedness, I was surprised to be told that my discovery could not be the cause of the issue, since the other allocation flag was set to 'No'.

To their credit, however, they did allow me to run through some system tests, and I found that this one setting, against all logic, was in fact where the problem lived.

Issue solved, go live saved, bonus awarded (not really). By this point everyone was just ready to get the issue behind them and focus on the important go live.

Not just answers, providing solutions